ExaNoDe explained – Why do we need ever faster computing?

High-performance computing (HPC) is an important tool for science, industry and decision making. Today’s fastest computers are already incredibly powerful, yet many scientific and industrial challenges demand still more computing power, for instance in climate research, drug discovery and materials design.

What is Exascale computing?

The speed of a computer is typically measured by the number of arithmetic operations it can perform per second (floating-point operations per second, or FLOPS). As of summer 2017, the fastest computers have reached a speed of 93 PetaFLOPS (on a standard benchmark), which is 93×10¹⁵ (or 93,000,000,000,000,000) operations per second (see box). A system delivering one ExaFLOPS would be more than 10 times faster, with at least 10¹⁸ operations per second[1]. In comparison, a standard desktop computer reaches a computing performance in the GigaFLOPS range (one GigaFLOPS is 10⁹ operations per second). An Exascale computer would thus be more powerful than such a desktop by a factor of roughly one billion.
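The ratios quoted above can be checked with a few lines of arithmetic. This is only a sketch: the variable names are illustrative, and the desktop is assumed to run at exactly 1 GigaFLOPS, the lower end of the "GigaFLOPS range".

```python
# SI prefixes used for the FLOPS figures in the text.
GIGA = 10**9
PETA = 10**15
EXA = 10**18

fastest_2017 = 93 * PETA   # 93 PetaFLOPS, the summer 2017 figure quoted above
exascale = 1 * EXA         # one ExaFLOPS
desktop = 1 * GIGA         # assumption: a desktop at exactly 1 GigaFLOPS

speedup_vs_2017 = exascale / fastest_2017
speedup_vs_desktop = exascale // desktop

print(f"Exascale vs. 93 PetaFLOPS system: {speedup_vs_2017:.1f}x")     # 10.8x
print(f"Exascale vs. 1 GigaFLOPS desktop: {speedup_vs_desktop:,}x")    # 1,000,000,000x
```

A faster desktop, say 10 GigaFLOPS, would shrink the second ratio to one hundred million, which is why the text speaks of a factor of "roughly" one billion.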

If (by way of analogy) we let the speed of an Exascale computer correspond to the speed of a moon rocket (which was around 40,000 km/h for Apollo 10), then the computing performance of a typical desktop computer would correspond to about 1/10 of the speed of a snail (which crawls around 3 metres per hour).

The speed advantage of a space rocket over a snail is even smaller than that of an Exascale system over a desktop computer.
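The analogy can be worked through numerically. The sketch below uses the two speeds given in the text; the function name and the desktop speeds passed to it are assumptions made for illustration, since the article only places a desktop "in the GigaFLOPS range".

```python
ROCKET_M_PER_H = 40_000 * 1000   # 40,000 km/h (Apollo 10) in metres per hour
SNAIL_M_PER_H = 3                # snail speed quoted in the text
EXA_FLOPS = 10**18

# How many times faster the rocket is than the snail.
rocket_vs_snail = ROCKET_M_PER_H / SNAIL_M_PER_H

def desktop_as_fraction_of_snail(desktop_flops):
    """Scale the rocket's speed down by the Exascale-to-desktop ratio,
    then express the result as a fraction of the snail's speed."""
    equivalent_speed = ROCKET_M_PER_H * desktop_flops / EXA_FLOPS  # metres per hour
    return equivalent_speed / SNAIL_M_PER_H

print(f"Rocket vs. snail: {rocket_vs_snail:.2e}")
for gigaflops in (1, 10):  # assumed desktop speeds, not figures from the article
    fraction = desktop_as_fraction_of_snail(gigaflops * 10**9)
    print(f"{gigaflops} GigaFLOPS desktop: {fraction:.3f} of a snail's speed")
```

The rocket is about 13 million times faster than the snail, well below the Exascale-to-desktop ratio. The "about 1/10 of the speed of a snail" figure corresponds to a desktop near 10 GigaFLOPS; a 1 GigaFLOPS machine would be slower still.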

Application examples:

On the road to Exascale

Because reaching Exascale speed is both important and difficult (see below), the EU, like other big economies, has launched a research program within its European Cloud Initiative for the development of Exascale technology. Member states have launched a cooperation to deploy Exascale computers by 2022. The ETP4HPC think tank and advisory group has defined a research agenda to overcome the many difficulties of building and operating Exascale-class systems.

Giga, Peta, Exa & Co

Why building fast computers is hard

One could naively think that simply connecting enough standard computers and memory would result in a fast enough system. This is not the case. Putting 1,000 cars or car engines together will produce neither a car 1,000 times as fast nor a usable system 1,000 times as powerful, not to mention the noise, pollution and fuel consumption. Different approaches are needed to reach those goals.

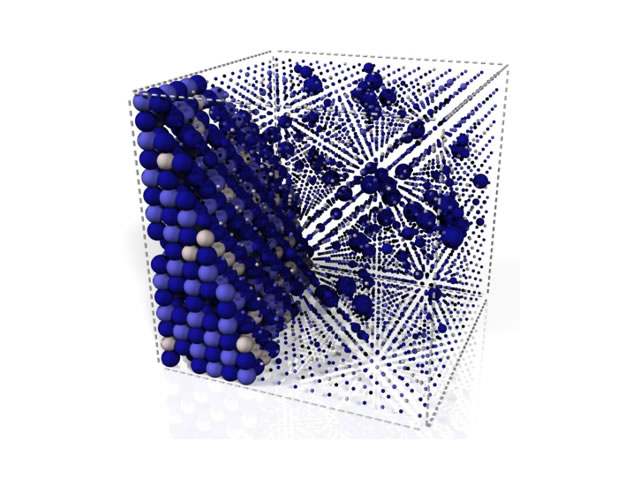

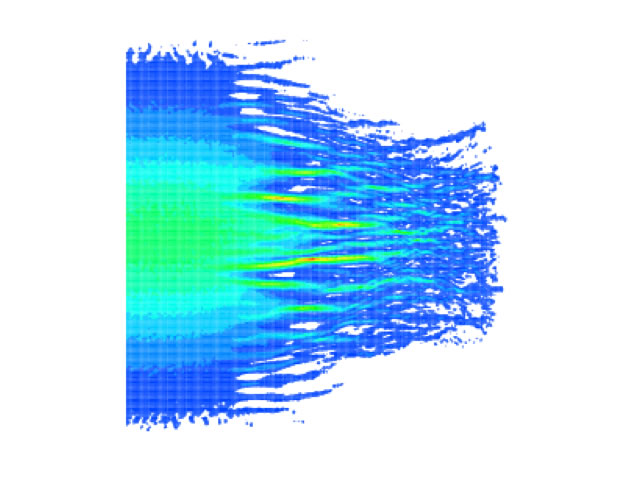

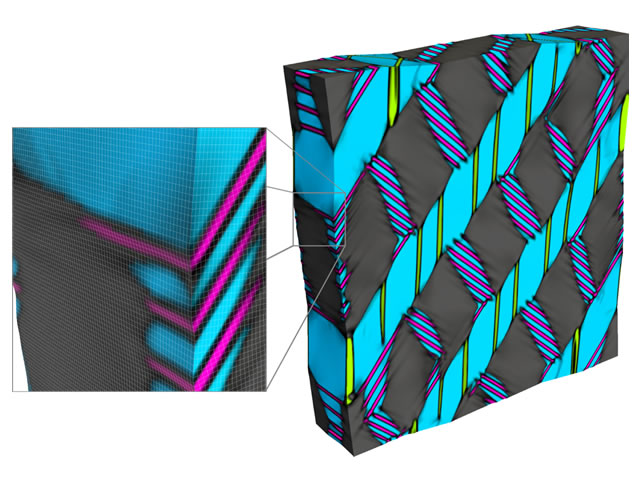

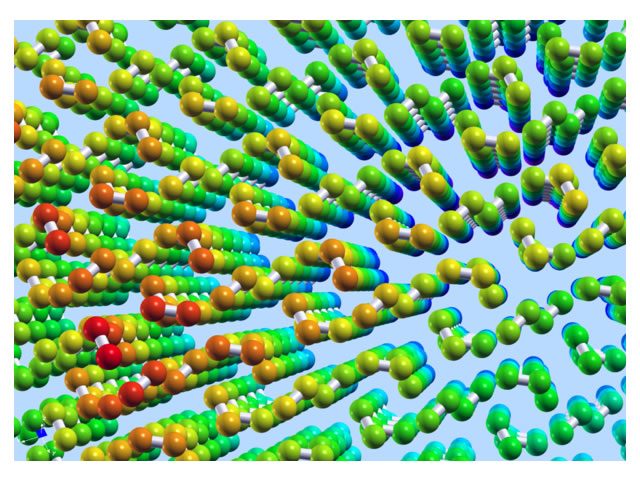

Similar barriers exist for computers. At the most basic level, a computer works by moving data from memory to a compute unit (“load”), calculating the results (“process”) and moving the results back to memory (“store”). If standard PC technology were used to connect memory and compute units, data movement alone in an Exascale computer would need roughly as much power as the whole of the UK.

A promising approach to building Exascale machines is therefore to store data very close to the compute unit where it is needed. One technique for this is to extend the traditional two-dimensional layout of electric circuits by stacking integrated circuits in the third dimension. This not only consumes much less energy but is also much faster: the transport of data has become a major constraint on processing speed (much more than the computing itself), similar to the contrast between slow airport security checks and fast flights.

An Exascale computer would need hundreds of thousands, if not millions, of units comprising processing and memory, with fast connections between them for data that is needed in more than one place. However, designing and producing such novel compute units is very expensive, so it is of paramount importance to find ways to use components that can be mass-produced (and used outside the HPC world) to reduce cost.

How does ExaNoDe address (some of) these challenges?

ExaNoDe has developed the core technology to build low-power, low-cost units featuring a denser packing of integrated circuits. These units serve to build prototypes of larger components (compute nodes), which can eventually be used to build up larger parts of an Exascale computer. This approach has the potential to significantly reduce manufacturing and power costs. ExaNoDe has also shown how efficient programs for such hardware can be developed: a global memory address space helps the programmer to locate data in this vast computer, much like GPS provides a universal way to specify locations on Earth. And, analogous to the traffic signs and maps that help avoid traffic jams, established frameworks for HPC software development (like the Message Passing Interface) have been adapted to the new hardware design.
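To make the idea of a global address space more concrete, here is a minimal sketch of how a single global address could be translated into "which node, and where on that node". It is a toy model, not ExaNoDe's actual scheme: the names `Location`, `locate` and `to_global` and the 1 GiB of memory per node are all hypothetical.

```python
from typing import NamedTuple

class Location(NamedTuple):
    node: int     # which compute node holds the data
    offset: int   # byte position inside that node's local memory

# Hypothetical value: each node contributes 1 GiB to the global address space.
NODE_MEMORY_BYTES = 1 << 30

def locate(global_address: int) -> Location:
    """Split a global address into a node number and a local offset."""
    node, offset = divmod(global_address, NODE_MEMORY_BYTES)
    return Location(node, offset)

def to_global(location: Location) -> int:
    """Inverse of locate(): rebuild the global address from node and offset."""
    return location.node * NODE_MEMORY_BYTES + location.offset

address = 3 * NODE_MEMORY_BYTES + 42
print(locate(address))   # Location(node=3, offset=42)
```

As with GPS coordinates, the programmer works with one kind of address everywhere, and the system works out which node actually holds the data.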

[1] 10¹⁸ corresponds to a number with 18 zeros: 10¹⁸ = 1,000,000,000,000,000,000 = 1 billion billion